Ray Tracing in One Weekend Reimagined: A Proof of Concept for React Native and Rust

Posted in react-native, rust-lang, raytracer, ray-tracing-for-mobile-apps, native-modules on January 16, 2024 by Hemanta Sapkota ‐ 6 min read

In mobile app development, high-performance graphics work, especially something as demanding as ray tracing, has usually meant writing native code. Pairing Rust, a language known for its speed and memory safety, with React Native offers another route. This post explains how to integrate a Rust-based ray tracer into a React Native app, as a realistic example of extending what a cross-platform app can do.

- Quick Preview

- What is a Ray Tracer

- The Rust Ray Tracer

- Understanding the Scene Graph

- React Native Architecture for Integrating Rust Ray Tracer

- The Rust Ray Tracer Render Function

- Understanding the Rust Ray Tracer Render Function

- Apple Vision Pro: Challenges and Considerations

- Future Prospects: Spatial Computing with LLM-Augmented AI

- Conclusion

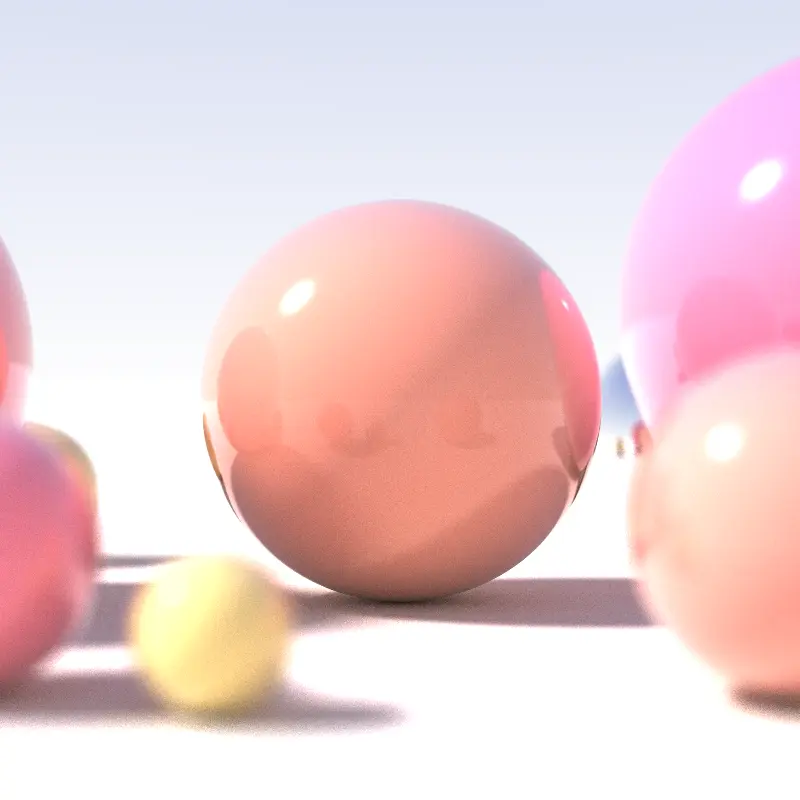

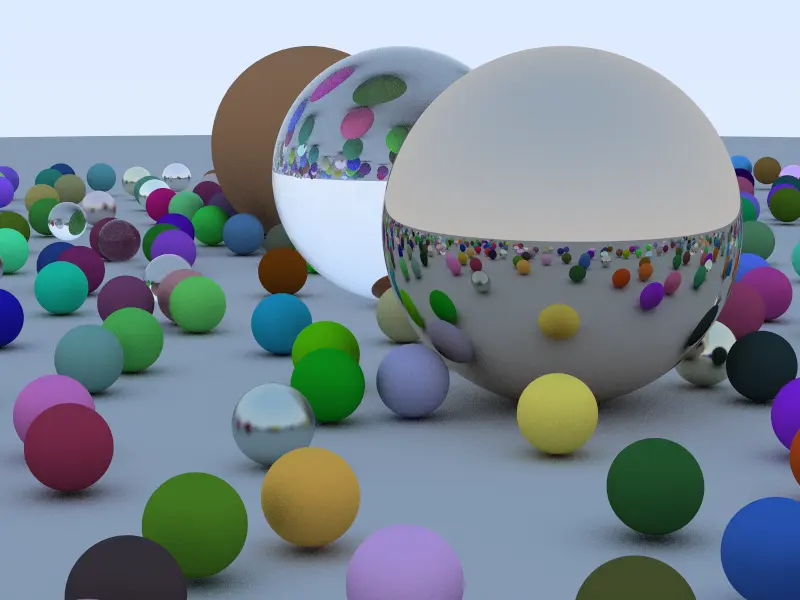

Quick Preview

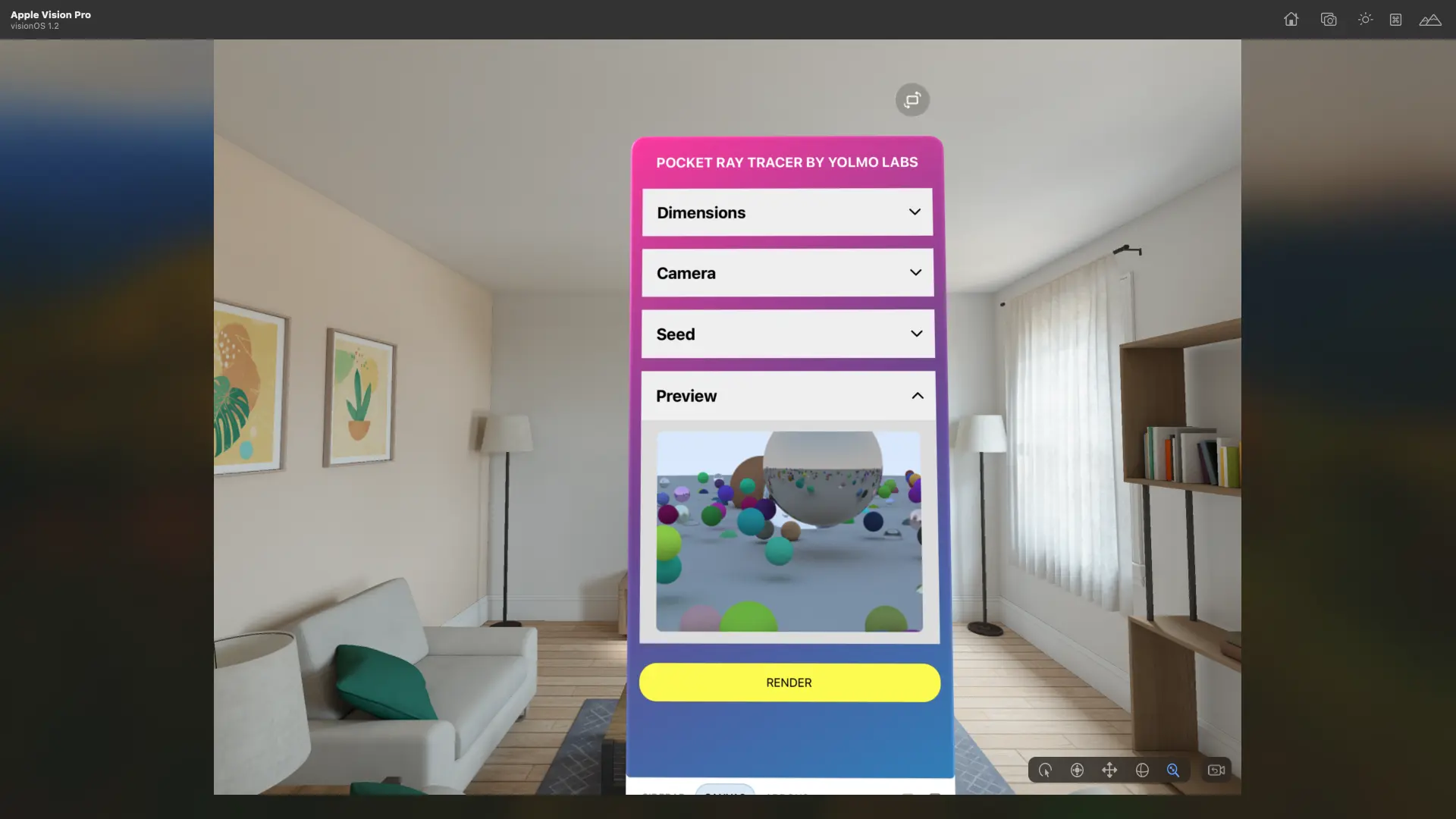

Apple Vision Pro

iPhone

What is a Ray Tracer

A ray tracer is a renderer that produces lifelike images by simulating how light interacts with objects. It traces rays from the viewer’s viewpoint into the scene, back toward the light sources, and determines the color and brightness of each pixel from the materials and lights each ray meets along its path. This technique can reproduce complex effects such as shadows, reflections, and refraction (the bending of light), which is why it is often used for high-quality 3D graphics in movies and games.
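The core operation behind this description is testing whether a ray hits an object. The following is a minimal sketch of a ray-sphere intersection test in the style of “Ray Tracing in One Weekend”; the `Vec3`, `Ray`, and `hit_sphere` names are illustrative assumptions, not code taken from the rust-raytracer repository.

```rust
/// A minimal 3D vector with only the operations this sketch needs.
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Vec3 {
    pub x: f64,
    pub y: f64,
    pub z: f64,
}

impl Vec3 {
    pub fn new(x: f64, y: f64, z: f64) -> Self {
        Vec3 { x, y, z }
    }

    pub fn sub(self, o: Vec3) -> Vec3 {
        Vec3::new(self.x - o.x, self.y - o.y, self.z - o.z)
    }

    pub fn dot(self, o: Vec3) -> f64 {
        self.x * o.x + self.y * o.y + self.z * o.z
    }
}

/// A ray that starts at `origin` and travels along `direction`.
pub struct Ray {
    pub origin: Vec3,
    pub direction: Vec3,
}

/// Returns the distance along the ray to the nearest intersection in front
/// of the origin, or `None` if the ray misses the sphere.
///
/// Solves |origin + t * direction - center|^2 = radius^2 for t.
pub fn hit_sphere(center: Vec3, radius: f64, ray: &Ray) -> Option<f64> {
    let oc = ray.origin.sub(center);
    let a = ray.direction.dot(ray.direction);
    let half_b = oc.dot(ray.direction);
    let c = oc.dot(oc) - radius * radius;
    let discriminant = half_b * half_b - a * c;
    if discriminant < 0.0 {
        return None; // no real roots: the ray misses the sphere
    }
    let sqrt_d = discriminant.sqrt();
    // Try the nearer root first, then the farther one.
    let near = (-half_b - sqrt_d) / a;
    if near > 0.0 {
        return Some(near);
    }
    let far = (-half_b + sqrt_d) / a;
    if far > 0.0 {
        Some(far)
    } else {
        None
    }
}
```

A full ray tracer repeats this test for every object and every pixel sample, then follows reflected or refracted rays up to a maximum depth, which is where the computational cost comes from.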

The Rust Ray Tracer

We’re using a ray tracer written in Rust, chosen for how efficiently it simulates light paths to produce realistic images. The source code is available on GitHub and shows how Rust can be paired with React Native for computationally heavy tasks.

The rust-raytracer, created by Stripe’s CTO David Singleton and inspired by Peter Shirley’s “Ray Tracing in One Weekend”, uses a scene graph to generate 3D images. For more insights into the development and capabilities of this ray tracer, you can read David Singleton’s blog post on the subject.

Understanding the Scene Graph

The scene graph is a complete description of the scene to render. It specifies the image size, the number of samples per pixel, the maximum ray depth, and a sky texture. A camera is placed in the scene with a position, orientation, field of view, and aspect ratio. The scene also contains a list of objects with different properties: spheres with various materials and textures, a light source, and special cases such as a hollow sphere.

The table below shows an example of a scene graph.

| Property | Value | Description |
|---|---|---|
| width | 800 | Image width |
| height | 600 | Image height |
| samples_per_pixel | 128 | Pixel sampling rate |
| max_depth | 50 | Maximum depth for ray tracing |
| sky.texture | "data/beach.jpg" | Sky texture file |
| camera.look_from | {x: -2.0, y: 0.5, z: 1.0} | Camera origin |
| camera.look_at | {x: 0.0, y: 0.0, z: -1.0} | Point where the camera is looking |
| camera.vup | {x: 0.0, y: 1.0, z: 0.0} | Camera’s up vector |
| camera.vfov | 50.0 | Camera’s vertical field of view |
| camera.aspect | 1.3333333333333333 | Aspect ratio of the camera |
| objects | Various | Array of objects in the scene |

The full scene graph is available in JSON format at https://github.com/dps/rust-raytracer/blob/main/raytracer/data/test_scene.json
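As a rough illustration, the properties in the table could map onto Rust types like the ones below. This is a sketch under assumptions: the struct and field names simply mirror the table, the `objects` array is left out because its element types are not described here, and the actual definitions in rust-raytracer may differ.

```rust
/// A point or direction in 3D space, matching the `{x, y, z}` values in the table.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Vec3 {
    pub x: f64,
    pub y: f64,
    pub z: f64,
}

/// Camera placement and projection settings.
#[derive(Debug, Clone, PartialEq)]
pub struct Camera {
    pub look_from: Vec3,
    pub look_at: Vec3,
    pub vup: Vec3,
    pub vfov: f64,   // vertical field of view
    pub aspect: f64, // width divided by height
}

/// Background used for rays that hit nothing.
#[derive(Debug, Clone, PartialEq)]
pub struct Sky {
    pub texture: String,
}

/// Top-level scene description (hypothetical layout; `objects` omitted).
#[derive(Debug, Clone, PartialEq)]
pub struct Config {
    pub width: usize,
    pub height: usize,
    pub samples_per_pixel: usize,
    pub max_depth: usize,
    pub sky: Sky,
    pub camera: Camera,
}

/// Builds the example scene from the table, deriving the aspect ratio
/// from the image size instead of hard-coding it.
pub fn example_config() -> Config {
    let (width, height): (usize, usize) = (800, 600);
    Config {
        width,
        height,
        samples_per_pixel: 128,
        max_depth: 50,
        sky: Sky { texture: String::from("data/beach.jpg") },
        camera: Camera {
            look_from: Vec3 { x: -2.0, y: 0.5, z: 1.0 },
            look_at: Vec3 { x: 0.0, y: 0.0, z: -1.0 },
            vup: Vec3 { x: 0.0, y: 1.0, z: 0.0 },
            vfov: 50.0,
            aspect: width as f64 / height as f64,
        },
    }
}
```

Deriving `aspect` from `width` and `height` keeps the camera consistent with the image size; the table’s value of 1.3333333333333333 is exactly 800 / 600.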

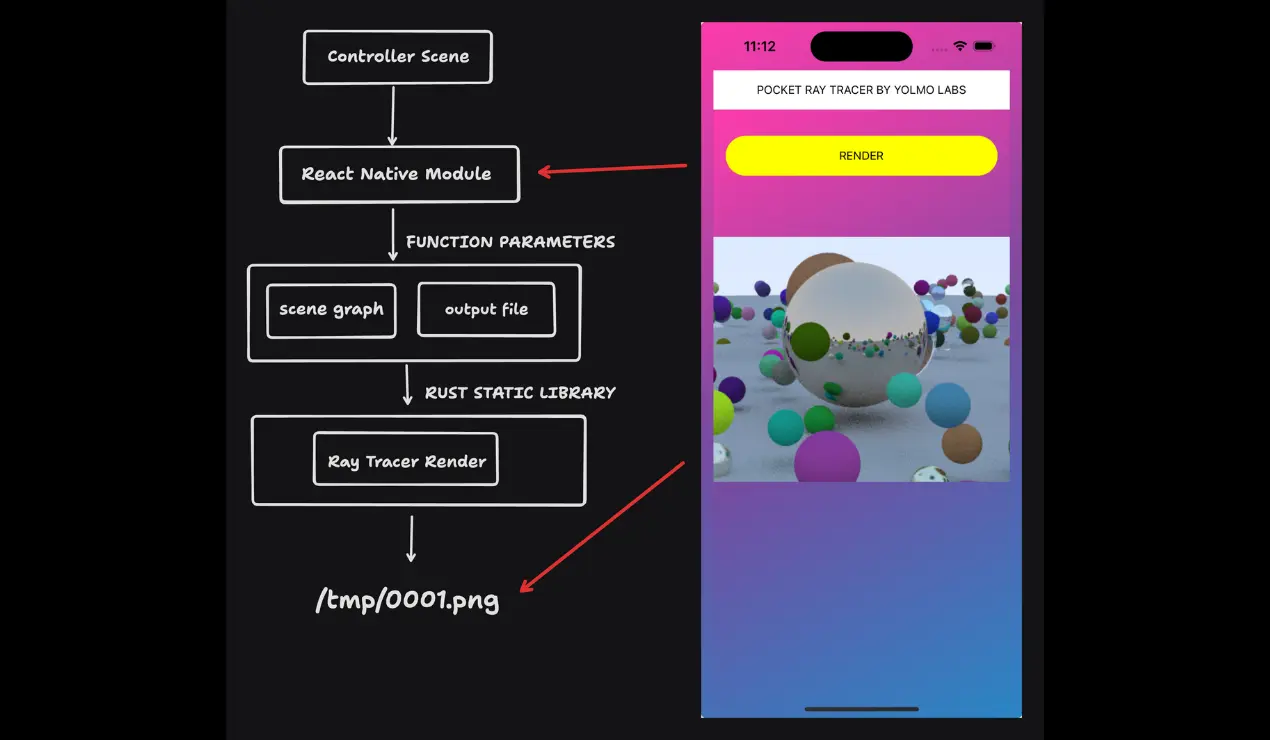

React Native Architecture for Integrating Rust Ray Tracer

The process starts when the user interacts with the React Native app’s user interface at the Controller Scene and triggers a render. The React Native Module then receives the scene graph and the location for the output file, and calls into the Rust Static Library. That library contains the Ray Tracer Render function, which performs the heavy ray tracing computation and generates an image from the scene graph. The image is saved to a predetermined file path, like /tmp/0001.png, where the React Native app can load it and present the rendered scene to the user. This design pairs Rust’s computational performance with React Native’s flexible user interface layer.

To learn more about Rust integration in React Native, follow along here.

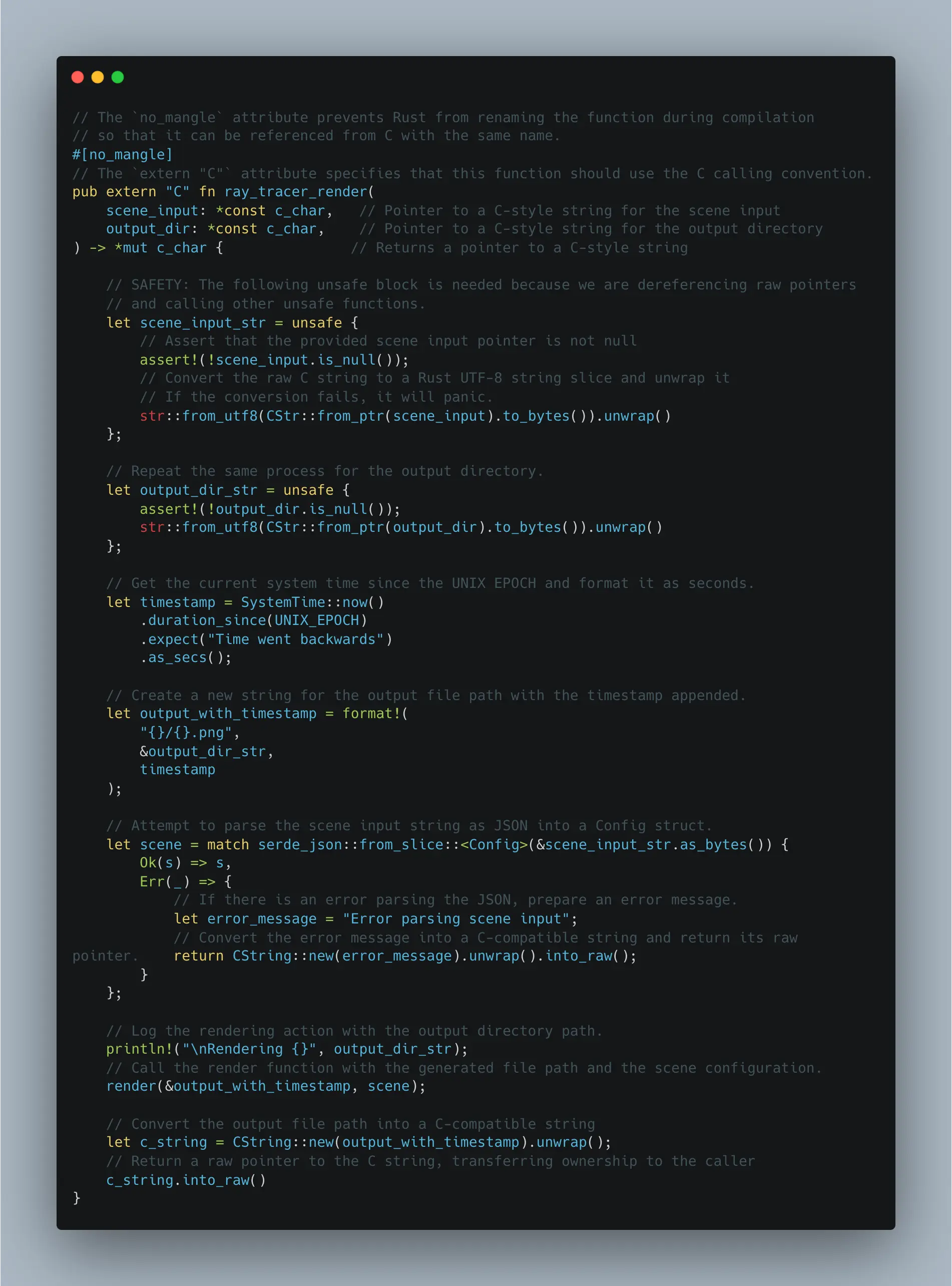

The Rust Ray Tracer Render Function

Understanding the Rust Ray Tracer Render Function

The ray_tracer_render function demonstrates how Rust can work with other languages and platforms, specifically using the C Foreign Function Interface (FFI). This function serves as a practical example of Rust’s interoperability.

Safe Interaction with Unsafe Code

The unsafe keyword in Rust marks operations the compiler cannot verify, such as dereferencing raw pointers. It is needed when interacting with C code, where Rust’s usual safety guarantees do not apply. The function checks that the pointers are not null before proceeding, which shows how Rust balances safety with the flexibility required for low-level systems programming.

String Handling

The function converts C strings, passed in as *const c_char pointers, into Rust String values, which are safer and easier to handle within Rust. The conversion is applied to the scene setup and the output file path, and it forms the bridge between the calling code (probably written in a language with C-style strings, like C or C++) and the Rust logic.
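The null check and the string conversion can be combined in one small helper. The sketch below uses only the standard library’s `CStr`; the helper name `c_str_to_string` is an assumption for illustration and is not taken from the original function.

```rust
use std::ffi::CStr;
use std::os::raw::c_char;

/// Converts a C string pointer into an owned Rust `String`.
/// Returns `None` if the pointer is null or the bytes are not valid UTF-8.
///
/// # Safety
/// `ptr` must be null or point to a NUL-terminated string that stays valid
/// for the duration of this call.
pub unsafe fn c_str_to_string(ptr: *const c_char) -> Option<String> {
    // Check for null before touching the pointer at all.
    if ptr.is_null() {
        return None;
    }
    // SAFETY: the pointer is non-null, and the caller guarantees it points
    // to a valid NUL-terminated string.
    let c_str = unsafe { CStr::from_ptr(ptr) };
    // Copy the bytes into Rust-owned memory so the result does not depend
    // on the caller keeping the original buffer alive.
    c_str.to_str().ok().map(|s| s.to_owned())
}
```

Copying into an owned `String` costs one allocation, but it means the rest of the Rust code never holds a reference into memory that the C side controls.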

Timestamping for Uniqueness

To avoid overwriting previous renders, a timestamp is added to the output file name. This simple approach needs nothing beyond Rust’s standard library.
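One way to do this with only the standard library is sketched below. The exact naming scheme used by the original function is not shown in this post, so the `<stem>_<timestamp>.<ext>` format and the helper names here are assumptions.

```rust
use std::path::{Path, PathBuf};
use std::time::{SystemTime, UNIX_EPOCH};

/// Inserts `stamp` before the file extension, for example
/// `/tmp/0001.png` becomes `/tmp/0001_<stamp>.png`.
pub fn with_timestamp(path: &Path, stamp: u64) -> PathBuf {
    // Fall back to a generic stem if the path has no usable file name.
    let stem = path.file_stem().and_then(|s| s.to_str()).unwrap_or("render");
    let name = match path.extension().and_then(|e| e.to_str()) {
        Some(ext) => format!("{stem}_{stamp}.{ext}"),
        None => format!("{stem}_{stamp}"),
    };
    path.with_file_name(name)
}

/// Milliseconds since the Unix epoch, or 0 if the system clock is set
/// earlier than the epoch.
pub fn unix_millis_now() -> u64 {
    SystemTime::now()
        .duration_since(UNIX_EPOCH)
        .map(|d| d.as_millis() as u64)
        .unwrap_or(0)
}
```

Calling `with_timestamp(Path::new("/tmp/0001.png"), unix_millis_now())` then yields a fresh file name for each render. Keeping the timestamp as a parameter, instead of reading the clock inside `with_timestamp`, makes the naming logic easy to test.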

JSON Parsing

The scene setup, which is expected to be a JSON string, is parsed into a Rust structure called Config. This shows how Rust works with standard data exchange formats, which makes it useful across many applications and systems. If parsing fails, the function reports the problem by returning a C-compatible string, so the error reaches the caller in a form it can read.
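The error path can be sketched as follows. To keep the example free of external crates, `parse_scene` is a stand-in that only checks that the input looks like a JSON object; a real implementation would use a JSON library here. `Config`, `parse_scene`, `to_c_string`, and `parse_or_error` are all hypothetical names for illustration.

```rust
use std::ffi::CString;
use std::os::raw::c_char;

/// Placeholder for the parsed scene; the real structure would hold the
/// image size, camera, objects, and so on.
pub struct Config {
    pub raw: String,
}

/// Stand-in for a real JSON parser (assumption: it only checks the shape).
pub fn parse_scene(json: &str) -> Result<Config, String> {
    let trimmed = json.trim();
    if trimmed.starts_with('{') && trimmed.ends_with('}') {
        Ok(Config { raw: trimmed.to_owned() })
    } else {
        Err(format!(
            "invalid scene JSON: expected an object, got {} bytes",
            json.len()
        ))
    }
}

/// Converts a message into a heap-allocated C string. Ownership passes to
/// the caller, who must eventually hand the pointer back to Rust to free it.
pub fn to_c_string(message: &str) -> *mut c_char {
    // C strings cannot contain interior NUL bytes, so replace any that occur.
    let sanitized = message.replace('\0', " ");
    CString::new(sanitized)
        .expect("NUL bytes were removed above")
        .into_raw()
}

/// Parses the scene, turning any failure into a C-compatible error string
/// instead of panicking across the FFI boundary.
pub fn parse_or_error(json: &str) -> Result<Config, *mut c_char> {
    parse_scene(json).map_err(|e| to_c_string(&e))
}
```

Returning the error as data matters here because unwinding a Rust panic into C or Objective-C code is not something the caller can recover from.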

Rendering and Output

Lastly, the function calls the render method, passing the timestamped output file path and the scene configuration as inputs. After rendering, it converts the output file path back into a C-compatible string and returns it to the caller. The complete procedure illustrates how Rust can be used for demanding tasks such as ray tracing and can produce results that software written in other programming languages can consume.
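Handing a string back to C raises the question of who frees it. A common pattern, sketched below under assumptions, is to transfer ownership with `CString::into_raw` and to expose a companion function that the caller uses to give the memory back. The names `path_into_c` and `ray_tracer_free_string` are hypothetical; the post does not show whether the original library provides such a function.

```rust
use std::ffi::CString;
use std::os::raw::c_char;

/// Converts the final output path into a C string owned by the caller.
/// Returns a null pointer if the path contains an interior NUL byte.
pub fn path_into_c(path: String) -> *mut c_char {
    match CString::new(path) {
        Ok(c_string) => c_string.into_raw(),
        Err(_) => std::ptr::null_mut(),
    }
}

/// Frees a string previously returned by `path_into_c`.
///
/// To export this symbol from a static library, it would also need the
/// `#[no_mangle]` attribute (`#[unsafe(no_mangle)]` in the 2024 edition).
///
/// # Safety
/// `ptr` must be null or a pointer obtained from `CString::into_raw` that
/// has not been freed already.
pub unsafe extern "C" fn ray_tracer_free_string(ptr: *mut c_char) {
    if ptr.is_null() {
        return;
    }
    // SAFETY: the caller guarantees the pointer came from `into_raw`, so
    // rebuilding the `CString` and dropping it releases the allocation.
    drop(unsafe { CString::from_raw(ptr) });
}
```

The memory must be released by Rust, not by the C side’s `free`, because the two may use different allocators.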

Apple Vision Pro: Challenges and Considerations

The Pocket Ray Tracer app shown running on Apple Vision Pro demonstrates the potential of ray tracing in mixed reality devices. With simple controls for adjusting scene parameters and a real-time preview, it illustrates how complex rendering techniques can be optimized for mobile platforms. This integration of advanced graphics in wearable tech represents significant progress, balancing visual quality with performance constraints.

Future Prospects: Spatial Computing with LLM-Augmented AI

The Pocket Ray Tracer app on Apple Vision Pro demonstrates the potential of advanced graphics in spatial computing. Combining this ray tracing capability with embedded large language models like Microsoft’s Phi-3 could revolutionize mixed reality experiences. Such integration could enable on-device AI to dynamically generate and render photorealistic content, enhancing immersion in entertainment and education. This fusion of compact yet powerful AI models with sophisticated rendering techniques paves the way for more responsive and context-aware spatial computing applications, all while maintaining user privacy through on-device processing.

Conclusion

The combination of Rust and React Native enhances mobile app development by enabling high-performance graphics. This approach, previously difficult in cross-platform settings, allows the production of visually impressive ray-traced images. This progress suggests that mobile apps could potentially offer more engaging and visually superior experiences in the future.
